AI Image Detection to Confirm What's Real, Like Rally Crowds

Was Kamala's crowd image real or not? Check with AI image detection.

How AI Image Detection Tools Confirm What's Real in Rally Crowds

Is this the most famous case of an AI accusation? Former President and current candidate Donald Trump recently claimed that a photo from Kamala Harris's campaign rally at Detroit's airport had been manipulated using artificial intelligence to show a larger crowd than was present. Trump made the accusation in a series of social media posts, alleging that the picture of the crowd was fake.

These events, both the actual gathering and the claims made afterward, have highlighted the role of AI detection in verifying the authenticity of images, audio, and even crowd sizes.

Understanding AI Detection

AI detection refers to the technological methods used to recognize content that has been created or altered by artificial intelligence. This area has expanded rapidly in response to the increasing sophistication of AI-generated text, images, audio, and video. Detection systems utilize advanced algorithms and machine learning techniques to scrutinize digital content for signs of artificial creation or manipulation.

These systems are used to combat the spread of misinformation, deepfakes, and other deceptive AI-generated content. By identifying patterns, inconsistencies, or anomalies that may not be visible to the human eye or audible to the human ear, AI detection enhances transparency and trust in digital media. The goal is not to inhibit the use of AI in content creation, but to ensure that such content is clearly identified, trustworthy, and used for good reasons.

AI detection is now being used across various fields, including journalism, social media moderation, KYC compliance, cybersecurity, and digital forensics.

What is AI Image Detection and How Does it Function?

AI image detection systems work by recognizing patterns and detecting anomalies. Trained on extensive datasets of both real and AI-generated content, these systems learn to distinguish between the two through subtle differences. The process generally involves several key steps:

Data Input - The system receives the content to be analyzed, real or not.

Feature Extraction - The AI dissects the content into analyzable components, such as pixel patterns in images.

Comparison - These extracted features are compared against known patterns of both human-created and AI-generated content.

Statistical Analysis - The system calculates the likelihood that the content was AI-generated by examining the presence or absence of certain features or patterns.

Decision - Based on predefined criteria, the system concludes whether the content is likely to be AI-generated or not.
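To make the five steps above concrete, here is a minimal Python sketch of the same flow on a tiny grayscale pixel grid. It is an illustration only, not how AI or Not or any production detector works: the two features, the `REFERENCE` profiles, the `SCALE` values, and the 0.5 threshold are all hypothetical numbers invented for this example, whereas real systems learn their patterns from large labeled datasets.

```python
import math
from typing import Dict, List, Tuple

Image = List[List[int]]  # grayscale pixel grid, values 0-255

# Hypothetical reference profiles and scales, invented for illustration only.
# A real detector learns its patterns from large labeled datasets.
REFERENCE = {
    "real": {"variance": 2000.0, "roughness": 30.0},
    "ai": {"variance": 500.0, "roughness": 5.0},
}
SCALE = {"variance": 1000.0, "roughness": 10.0}


def extract_features(image: Image) -> Dict[str, float]:
    """Feature extraction: reduce the pixel grid to two summary statistics."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    variance = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    # Mean absolute difference between horizontal neighbors (crude texture measure).
    diffs = [abs(row[i + 1] - row[i]) for row in image for i in range(len(row) - 1)]
    roughness = sum(diffs) / len(diffs) if diffs else 0.0
    return {"variance": variance, "roughness": roughness}


def distance(features: Dict[str, float], profile: Dict[str, float]) -> float:
    """Comparison: scaled Euclidean distance to one reference profile."""
    return math.sqrt(sum(((features[k] - profile[k]) / SCALE[k]) ** 2 for k in SCALE))


def ai_probability(features: Dict[str, float]) -> float:
    """Statistical analysis: turn the two distances into a score between 0 and 1."""
    gap = distance(features, REFERENCE["real"]) - distance(features, REFERENCE["ai"])
    return 1.0 / (1.0 + math.exp(-gap))


def decide(probability: float, threshold: float = 0.5) -> str:
    """Decision: apply a predefined threshold to the score."""
    if probability >= threshold:
        return "likely AI-generated"
    return "likely not AI-generated"


def analyze(image: Image) -> Tuple[float, str]:
    """Data input: accept the pixel grid and run it through every step."""
    probability = ai_probability(extract_features(image))
    return probability, decide(probability)
```

Calling `analyze(pixels)` returns the score and the verdict together. The premise baked into the toy profiles, that smoother images lean "AI", is an assumption made so the example has something to compare against; it is not a claim about real generators.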

Advanced systems incorporate deep learning and neural networks to enhance accuracy over time. These systems adapt to new methods of AI content generation, making them more resilient to evolving technologies.
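One simple way to picture "improving over time" is a model that nudges its weights each time it sees a newly labeled example. The sketch below uses a small logistic model as a stand-in for the deep networks mentioned above; the `OnlineDetector` class, its learning rate, and the assumption that features arrive already scaled to small numbers are all hypothetical choices for illustration.

```python
import math
from typing import List


class OnlineDetector:
    """Toy logistic model updated one labeled example at a time (a stand-in, not a deep network)."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features: List[float]) -> float:
        """Return the estimated probability that the content is AI-generated."""
        score = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-score))

    def update(self, features: List[float], is_ai: bool) -> None:
        """Take one gradient step toward the correct label for this example."""
        error = self.predict(features) - (1.0 if is_ai else 0.0)
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error
```

For example, a fresh `OnlineDetector(2)` scores any input at 0.5; after `update([1.0, 2.0], True)`, its score for that same input rises above 0.5. Feeding in examples from a newly released generator would shift the weights in the same way.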

Other Types of Content Analyzed by AI Detection

AI detection systems are designed to analyze a variety of digital content types, each with its own challenges and specialized detection methods:

Text - Systems analyze writing style, syntax, and content consistency to identify AI-generated text, often used to detect fake news, reviews, spam, or automated social media posts.

Images - Image detection examines pixel-level details, such as lighting, shadows, and facial features, to identify AI-generated or manipulated photos.

Audio - These detectors scrutinize speech patterns, background noise, and acoustic inconsistencies to detect synthetic or manipulated audio content.

Video - Video detection combines image and audio analysis techniques, while also assessing temporal consistency across frames to spot a deepfake.

Documents - AI can verify the authenticity of digital documents, detecting forgeries or alterations in official papers, contracts, or identification documents.
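As a rough illustration of the temporal consistency idea from the video item above, the following Python sketch measures how much each frame differs from the next and flags transitions that change far more than is typical for the clip. The function names, the `ratio` of 3.0, and the `min_baseline` floor are assumptions made for this example; real video detectors rely on far richer signals.

```python
import statistics
from typing import List

Frame = List[List[int]]  # grayscale pixel grid, values 0-255


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute pixel difference between two frames of the same size."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += abs(pixel_a - pixel_b)
            count += 1
    return total / count


def flag_inconsistent_transitions(
    frames: List[Frame], ratio: float = 3.0, min_baseline: float = 1.0
) -> List[int]:
    """Return indices i where the change from frame i to frame i + 1 is unusually large.

    A transition is flagged when its difference exceeds `ratio` times the
    median difference of the clip (with a small floor so static clips
    do not flag every tiny change).
    """
    diffs = [frame_difference(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    if not diffs:
        return []
    baseline = max(statistics.median(diffs), min_baseline)
    return [i for i, d in enumerate(diffs) if d > ratio * baseline]
```

A flagged transition is only a prompt for closer review. An ordinary scene cut produces the same kind of spike, so this check cannot label a video as a deepfake on its own.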

The Detroit Rally Crowd Case Study

Event Details of the Crowd in Question

On August 7, 2024, Kamala Harris hosted a campaign rally at Detroit Metropolitan Airport, attracting considerable attention. According to the Harris campaign, the event saw an impressive turnout of 15,000 attendees. This strong showing was viewed as a promising sign of Harris's support in Michigan, a critical swing state. The rally featured speeches focused on economic policy and climate change, topics that resonated well with local voters. The airport location was strategically chosen for its ease of access and its symbolic ties to Detroit's industrial past. The event's success was reflected in the enthusiastic crowd and media coverage, which underscored Harris's increasing momentum in the region.

Donald Trump's Claims About the Photo Being "A.I.'d"

Following the rally, a photo showcasing the large crowd circulated widely on social media. Donald Trump, in a series of posts, alleged the image was manipulated using AI to inflate the crowd size. He claimed the photo was entirely fabricated, asserting that nobody was present at the rally. Trump compared this to his own events, stating he consistently draws larger crowds. These allegations sparked a heated debate online, with Trump supporters echoing his claims and Harris supporters defending the photo's authenticity. The controversy quickly escalated, drawing national attention and raising questions about the use of AI in political campaigns.

Verification of the Image

In response to the allegations, a thorough verification process was initiated. Reporters, photographers, and video journalists from various news organizations who were present at the rally corroborated the crowd size. They provided additional photos and video footage from different angles, supporting the Harris campaign's attendance claim. The campaign also released raw, unedited footage from multiple cameras. In-depth analysis of the disputed photo detected no evidence of AI manipulation, only minor, standard adjustments to brightness and contrast.

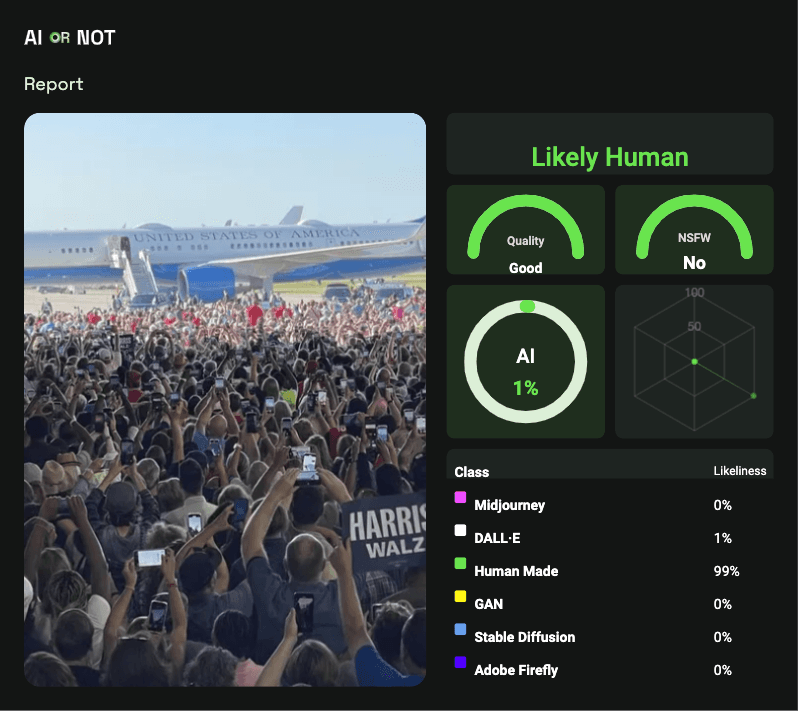

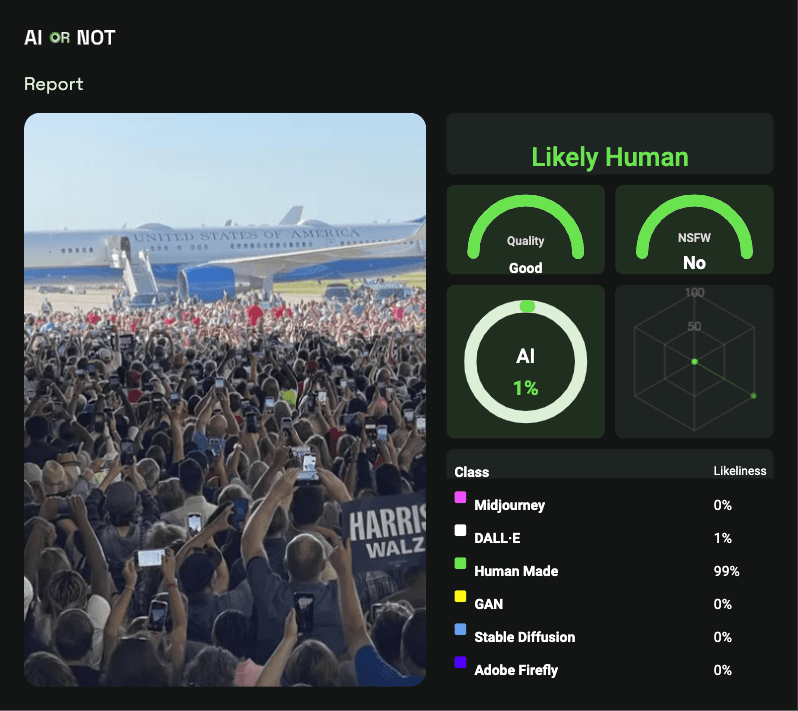

AI or Not Confirms Legitimacy of the Image

We at AI or Not, an AI image detector, further confirmed that the image was not AI-generated, adding another layer of validation. Results show that although some modifications and compression were present in the photo, they were not indicators of the image having been created with AI image generators such as Stable Diffusion, Midjourney, DALL-E, or others.

Dangers of AI Misinformation

The difference between real and artificial content is becoming harder to tell, creating an environment ripe for misinformation and manipulation.

A major concern is the rapid spread of AI-generated images that can fuel false narratives. These fabricated visuals can be employed to create convincing hoaxes, manipulate public opinion, or even sway political outcomes. The realism of advanced AI-generated images makes them particularly effective in influencing beliefs and emotions, especially when they are widely shared on social media.

The opposite is true as well: false claims that real images are AI-generated can have the same effect as the fake images themselves. In this misuse of AI as a defense, public figures falsely assert that real images or events are AI-generated, as was the case with the Detroit rally. This tactic can be used to undermine unfavorable evidence or to cast doubt on legitimate news coverage. Such claims exploit the growing public awareness of AI's capabilities, creating a "cry wolf" effect that may cause people to doubt the authenticity of all digital content, even when it is genuine.

Educating the Public on AI-Generated Images and Detection Techniques

As AI-generated content becomes increasingly common and harder to tell apart from real content, it's essential to educate the public on these technologies and how to identify what they produce.

Key areas for public education should include:

Understanding AI Capabilities

Developing Critical Thinking

Implementing Fact-Checking Techniques

Raising Awareness of Detection Tools

Educational initiatives can take multiple forms:

School Curricula

Online Courses

Public Workshops

Media Campaigns

Collaborations with Tech Companies

The aim is to cultivate informed, critical consumers of digital content who can still enjoy its benefits.

The controversy surrounding the Detroit rally photo shows the impact that false claims of AI manipulation can have.

Recent advances in AI detection provide a powerful means of verifying digital content and countering misinformation. With generative AI constantly evolving, AI detectors must also continuously advance to classify images correctly.

The challenge extends beyond just developing more accurate tools; it also involves educating the public about these dangers. As AI becomes increasingly integrated into our daily lives, the ability to critically assess digital content will be a vital skill for everyone.

We're just starting to adjust to the new dynamic between human-generated content and image generators, and detection will continue to shape our digital environment. By staying informed, supporting the development of effective detection tools, and critically evaluating digital content, we'll be able to tell once again what's real and what's not.